Authors: CMDR Linda Morris, RAN, CAAM Deputy Director, Maritime System Program Office, Governance

Alison Court, CFAM, CAMA Manager, Asset Performance Group, Industry Service Provider

Jim Kennedy, CPEng, CFAM, CAMA; Asset Management Specialist, Maritime System Program Office, Governance

Abstract #

For many organisations around the globe, ISO 55001 certification has been a strategic component of their Asset Management journey. However, not all organisations consider formal third party certification to be necessary, feasible or in fact desirable for that journey. Other ways of achieving the growth required in asset management capability are available, and for many organisations, may be a more productive journey than ISO Certification alone. This paper will describe the why and how of an Asset Management maturity assessment that drove the improving performance of a support organisation for maritime assets. The role and content of a “mentoring audit” of the Asset Management maturity of the organisation will be described along with the outcomes and lessons learned during the two year journey. Comparisons of the results achieved will be made with another similar program using frameworks drawn from national and international “not for profit bodies”.

Keywords: Asset Management, 55001, Certification, Maturity, Audits

1 INTRODUCTION #

ISO Certification is understood by many as demonstration of an organisation’s management system capacity to achieve a particular set of outcomes. These outcomes are often enunciated in the form of principles such as those that underpin Asset Management. These principles become the basis of a formal management system against which organisations may test themselves. This test is like an athlete in a high jump that is concerned only that the qualifying bar was cleared, not with how much the bar was cleared by.

Without a structured management system an organisation cannot claim an asset management capability. Without a sufficiently mature management system for asset management, the organisation cannot claim a match between the management system capability and the managed asset related risks that is necessary to achieve the organisation’s objectives. In this context, formal certification to the ISO 55001 standard for a management system for asset management is not a guarantee of required management capability. Certification only establishes a single “go/no go” threshold for the listed requirements in the standard.

The Maritime System Program Office (MSPO) involved does not have asset management accountability for the physical assets serviced. Operations belongs to the Royal Australian Navy. Navy outsources the sustainment function to Capability Acquisition and Sustainment Group who, for a variety of reasons, outsource their core management function to an industry service provider (ISP). Two key goals from the scope of work for the ISP were:

- (1.2.3) The Contractor is required to deliver and manage the Services using an asset management approach based on and consistent with ISO 55001:2014 Asset Management.

- (1.2.4c) The Objectives are to implement a structured asset management framework to maximise the value of the Commonwealth’s assets and in doing so minimise the Total Cost of Ownership;

As the program progressed, evidence from assessment of Project plans and related independent audits indicated that the required Asset Management objectives and expectations were not being achieved. A number of critical Asset Management – Management System Plans (AMSyPs), such as the Enterprise Management Plan, had not been approved. While asset management maturity in the organisation was anecdotally considered low, some formal means to assess the level of maturity was required to determine how low that maturity was in the Management System for Asset Management (AMS). An asset management maturity audit of some form was needed.

2 THE PROPOSITION #

A brief to undertake an Asset Management Audit of the ISP was accepted on 3 March 2019. Three alternative approaches to assessing the level of performance of an MSPO in establishing an asset management system framework were considered:

- An audit process based on the Maintenance Engineering Society of Australia (MESA) original excellence award in maintenance and asset management between 1996 and 2014, which was based on the Australian Business Quality Awards Process. Development would be quick and there is a successful provenance of providing a reasonable coverage of asset management capability.

- An AM maturity assessment using the Asset Management Council maturity tool. This approach uses a large question set (158) to test an organisation’s maturity in asset management. Significant written effort and knowledge of asset management is also required to describe the organisation’s current capability. Many workshops would be required and key staff would need to be made available.

- An independent gap analysis conducted by a certifying agency such as SAI Global or Bureau Veritas. Such a formal analysis, often quite basic, is meant to be a gap closure activity followed by a full ISO Certification effort. In the context of MSPO, many independent and mandatory certification activities were already underway, thus challenging both the value of, and the available resourcing for, yet another independent formal audit.

The brief’s recommendation to adopt Approach 1 above was accepted, with the following key points agreed. The audit would:

- adapt the now obsolete MESA assessment tool from the 1990s, which would be upgraded to assess the ISO 55000/1 essentials;

- be conducted by existing internal staff with guidance from the MSPO Asset Management Specialist;

- establish a baseline for the current state of AM maturity;

- gain an understanding of the potential gaps in the ISP asset management capability to allow for future AM capability development programs;

- assess the shape and size of options to close, over the next three years, the identified AM capability gaps; and

- provide a means of assessing the effectiveness of the AM capability defined in a suite of Asset Management System Plans being the plans for the Management System (AMSyPs).

3 DEVELOPING AN AUDIT TOOL #

As attainment of ISO 55001 Certification was not a stated objective for the MSPO, the priority was process development. A process audit tool was developed using the following list of sources:

- ISO 55001:2014 Management system for asset management;

- Australian Quality Awards and Bainbridge Awards assessment criteria;

- MESA Excellence Awards process 1996 to 2014;

- AM Council Asset Management System Model 2015;

- AM Council Capability Delivery Model 2005.

The views of what maturity in Asset Management might look like for an organisation in delivering a process gradually evolved to reflect a combination of the following attributes:

- Is there a defined process to satisfy the posed questions?

- Has that process been implemented and at what level?

- Are measures of achievement collected that allow measurement of process success? and

- Is this information used to continuously improve those processes?

The developed audit process comprised:

- 101 questions – see example set at Table 1,

- across 20 Topic areas such as “Context Setting” – See example at Table 2 and full Topic listing at Figure 1,

- with each question being assessed against four criteria – See example at Table 3, and

- marked on a scale 0 to 9 in 5 pairs (i.e. 0-1, 2-3, 4-5, 6-7, 8-9) – See example at Table 3.
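The scoring structure listed above can be sketched in code. This is a minimal illustration and not the audit tool itself: the function names `score_band` and `topic_average` and the sample scores are hypothetical. Only the four criteria, the 0 to 9 scale and the five paired bands come from the text.

```python
from statistics import mean

# Criteria and bands as described in the text; everything else is illustrative.
CRITERIA = ("Process", "Implementation", "Results", "Improvement")
BANDS = ("0-1", "2-3", "4-5", "6-7", "8-9")


def score_band(score: int) -> str:
    """Return the paired band (e.g. "4-5") that a 0-9 score falls into."""
    if not 0 <= score <= 9:
        raise ValueError("score must be between 0 and 9")
    return BANDS[score // 2]


def topic_average(scores: dict[str, int], criteria=CRITERIA) -> float:
    """Average a Topic's scores over the chosen criteria."""
    return mean(scores[c] for c in criteria)


# Hypothetical scores for one Topic (assumed values, not taken from the audit).
example = {"Process": 4, "Implementation": 3, "Results": 2, "Improvement": 1}
print(score_band(example["Process"]))        # band for the Process score
print(topic_average(example))                # average over all four criteria
print(topic_average(example, CRITERIA[:3]))  # average over the first three criteria only
```

Passing a subset of criteria to `topic_average` mirrors the initial audit, where only three of the four criteria were valued.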

The audit tool was used for both the initial audit and the complete follow on Mentoring Audit. The largest difference between the audits was in the extended involvement of both MSPO Governance and ISP over a 15 month period after the initial audit.

The initial audit required speed and a broad understanding of our current position. To achieve this requirement the following approach was adopted:

- The Auditor was to be certified at CFAM level with AM Council and a Certified Asset Management Auditor (CAMA) with the World Partners in Asset Management.

- Only half of the 101 questions were posed in the interviews – questions were not asked if the general consensus was that a score of zero was most likely.

- Only 3 of the 4 assessment criteria would be valued as “Improvement” was generally not possible due to the lack of documented processes to formally improve or measurements and results to provide data for improvement action.

- Only 15 of 19 Topic areas were assessed as 4 Topic areas were still at the stage of developing initial processes. These 4 Topics were assessed some 6 months later to provide a complete baseline.

- Interviews were conducted one on one by the Auditor with the responsible Lead Manager on the basis that results would not be circulated outside the MSPO. An Observer from the MSPO Commonwealth Governance team was present to assure probity.

- All statements were assumed as honestly given (and no OQE was requested or assessed).

- All interviews were documented and passed to the Interviewees for a fact check.

- Lists of Issues and Opportunities for improvement were noted.

- A final score for each audit Topic was allocated by the Interviewer in conjunction with the Observer.

- The Auditor produced a report on the outcome for the first 15 Topics and a second combined report for all Topics.

| Topic 1 | Context Setting (7 Questions) |

| a | Does the organisation have a defined list of stakeholders? |

| b | Is there an assessment of their importance? |

| c | Has an associated stakeholder engagement regime been defined? |

| d | Is there a list of internal and external issues determined from the stakeholder engagement process? |

| e | Has the asset management portfolio of assets been identified? |

| f | Is that asset scope clearly identified in a document under quality management control? |

| g | Is the scope aligned with the SAMP and AM Policy? |

Table 1 Example Question Set

| Topic 01 | Context Setting |

| Topic 04 | Asset Management Objectives |

| Topic 05 | Culture and Leadership |

| Topic 07 | Decision Making |

| Topic 11 | Resourcing |

| Topic 13 | Outsourcing |

Table 2 – Example Tranche Topic Set

| Score | Process | Implementation | Results | Improvement |

| 0-1 | Practices & processes do not apply ISO 55000/1 intent or meet a moderate amount of the Process Model | The process is not documented. There is little or no evidence of any systematic achievement of purpose. | Ad hoc and/or poor results achieved. Goals are not identified. | No improvements have been recorded. Improvement goals are not identified. |

| 2-3 | Description here | Description here | Description here | Description here |

| 4-5 | Description here | Description here | Description here | Description here |

| 6-7 | Description here | Description here | Description here | Description here |

| 8-9 | Description here | Description here | Description here | Description here |

Table 3 – Example Audit Assessment Criteria Set and Scoring Levels

A key characteristic of the scoring matrix, with its 5 levels, is the non-linearity of the scores. The exact level of exponential growth between scoring blocks, e.g. “0-1” and “2-3”, has yet to be determined. A rule of thumb would indicate that each move to the next block is twice as hard to achieve as the one before it, i.e. from “0-1” to “2-3” versus “2-3” to “4-5”. Thus going from a score of 1 to a score of 6 is 4 times as difficult as from a score of 1 to a score of 3. This non-linearity, which creates an increasing cost of compliance as the assessment score rises, requires careful consideration of the value to be achieved by increasing levels of maturity.
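The rule of thumb can be made concrete with a small calculation. This is one possible reading only: it assumes the first block-to-block step costs one unit of effort and each later step costs twice the previous one. The function names and the unit of effort are hypothetical, and the growth factor itself is an assumption, since the true rate has yet to be determined.

```python
def step_effort(step: int, factor: float = 2.0) -> float:
    """Relative effort of the step-th move between adjacent blocks.

    Step 1 is the move from the lowest block to the next one.
    The doubling factor is an assumed rule of thumb, not a measured value.
    """
    return factor ** (step - 1)


def cumulative_effort(start_score: int, end_score: int, factor: float = 2.0) -> float:
    """Total relative effort to move between the blocks holding two 0-9 scores."""
    start_block, end_block = start_score // 2, end_score // 2
    return sum(step_effort(s, factor) for s in range(start_block + 1, end_block + 1))


print(step_effort(3))           # effort of the third block-to-block step
print(cumulative_effort(1, 3))  # one block step
print(cumulative_effort(1, 6))  # three block steps
```

Under this assumption the third step, into the “6-7” block, costs 4 units, which is four times the first step, while the cumulative effort from a score of 1 to a score of 6 is 7 units against 1 unit for a score of 1 to a score of 3.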

4 MATURITY SCORES FROM THE INITIAL 2019 AUDIT #

The audit using the process described at Section 3 had some urgency. Accordingly, individual interviews of 9 Team Leads and the Head Manager were conducted between 24 April 2019 and 9 May 2019. The results were documented and circulated for fact checking by each interviewee. All interview outcomes were documented and signed off by the interviewee. The final report, of 71 pages, was submitted on 19 July.

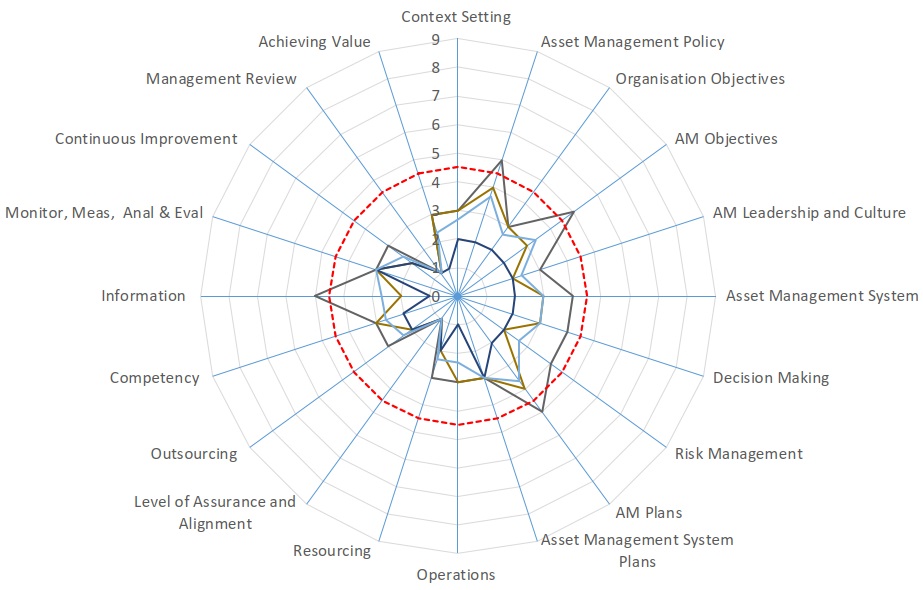

The initial Asset Management Maturity Audit of the ISP in 2019, indicated the lack of an Asset Management (management) System (AMS) as required by the contract. The performance produced low scores for virtually all Topics assessed as shown at Figure 1. The impact of the MSPO governance function’s asset management responsibilities on a number of the initial scores was noted in the report and it was observed that the Asset Management outcome is a team effort not just the effort of one party.

As shown at Figure 2, achieving ISO certification would likely require at least an average score of about 4.5 out of a maximum of 9, i.e. a mix of scores between 4 and 5 as a minimum across all criteria. Clearly certification would not be possible with the scores at Figure 1, where the average for each Topic was between 0.5 and 3.5. Additionally, as noted in over a decade of AM Council Excellence Awards Audits using similar criteria, the Criteria scores for immature organisations generally degrade across the quality management Plan, Do, Check and Act cycle. This is also clearly evident from the marks at Figure 2 as the audit progresses from assessing “Process” then “Implementation” then “Results”. As noted, “Improvement” was not measured.

Figure 1: Radar Plot for Initial AM Maturity Audit Scores by Topic

5 INITIAL AUDIT OUTCOME #

Using Figure 1 as the performance source and noting that the scores for each Topic go from 0 to a possible 9, the current Asset Management performance was clearly quite immature. As noted, a score of about 4.5 for all Criteria in all Topics would be indicative of a capacity to achieve a formal ISO certification if desired. The outcome did not satisfy the contract requirements to establish an Asset Management Framework and supporting system that achieved the intent of ISO 55001. Something now needed to be done, and fast.

Based on the assessment outcomes, the MSPO leadership (including the ISP) accepted a program of follow up actions focused on rapidly improving their asset management maturity by:

- conducting a progressive “Mentoring Audit” with MSPO Asset Management Specialist in the role of Mentor, which is intended to encourage the understanding and adoption of a management system for asset management in line with the principles of ISO 55000 and the requirements of the ISO management system 55001;

- extending the coverage of the audit to the MSPO governance function as well as the ISP in a joint interview process;

- encouraging engagement by including the first Tranche of the audit (Six selected Topics) in the contract Milestone payment for the next reporting period (a minimum average score of 3 across all criteria was to be achieved), funded from past unclaimed Asset Management milestone payments; and

- designing a low human resource impact audit program with progressive delivery that would grow capability without adversely affecting MSPO output imperatives.

6 THE MENTORING AUDIT 2020/21 #

The full Asset Management System maturity assessment, comprising three Tranches, was constrained to the MSPO, which was only accountable for producing plans for the materiel system and contracting those plans out for delivery by other Service Providers. Thus, the “Materiel System”, comprising maritime physical assets operated by others outside the MSPO, could not be included in the scope of the assessment. Only those aspects which relate to the management system, being the people, processes and information necessary to satisfy agreements with stakeholders for the development and delivery of the technical and management plans, were included. Some of these stakeholder agreements are enforceable contracts, such as those with the ISP and any outsourced arrangements from the ISP to others. Other internal providers had agreed Memorandums of Understanding or Agreements that are not enforceable at law.

The initial maturity audit outcomes recommended a number of process changes to create a “Mentoring Audit” (MA) approach that was focussed on progressive continuous improvement rather than achieving a particular threshold maturity score. The MA concept was new, being a combination of an audit with the key mentoring characteristics of:

- Preparation: This required the Lead Auditor to meet with the two assessed parties to set expectations for the completion of a pre-audit template. The template captures succinct responses to the questions to be asked and progress with opportunities identified in the Initial Audit.

- Negotiation: During the Topic Audit session with both Governance and ISP parties present and physically together, discuss each of their roles when answering the listed question. Discussion is to include processes applied, measures made, results achieved and improvements identified and progressed. Objective quality evidence is provided and opportunities for improvement sought.

- Enable Growth: Opportunities are listed for all Topics. This produces a large number of potential improvements which must be filtered for duplication, grouping, priority, and development of project portfolios.

- Provide Closure: Recognise what has been achieved. Create a vision of what comes next in the AM journey to a desired maturity.

The improved MA process (incorporating recommendations for improvements to the Initial audit progress) was as follows:

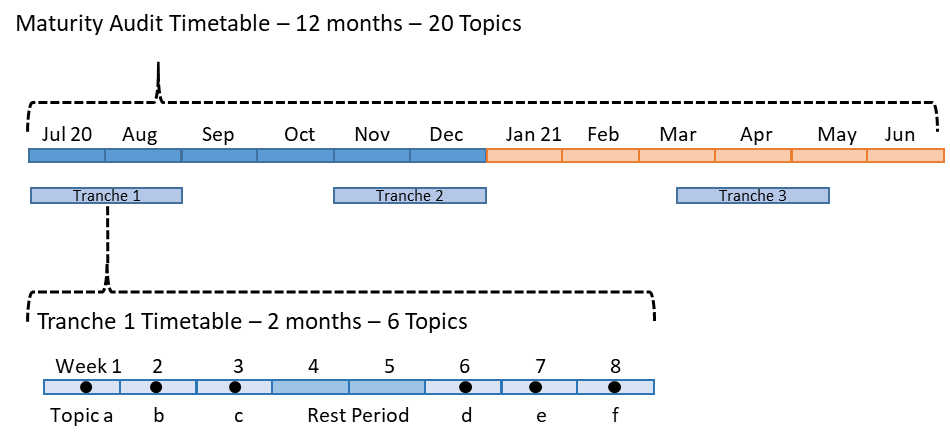

- The audit comprises three separate tranches of 6 to 7 Topics about 6 months apart. The 20 Topics (including a 9a and 9b) are depicted in the radar plot at Figure 3.

- The lead responsible person for the ISP and for MSPO Governance would respond jointly in the same room at the same time to each question;

- Both lead persons responding to the Topic pre-audit are to provide a written response to the question set of about one page maximum for each question. This is to prepare all parties for the audit response and provide time for the participants to prepare their evidence and any need for additional elaborating questions;

- The pre-audit response template is the basis of the conduct of the audit, with additional questions asked as necessary and the provision of evidence to support claims of conformance during the audit;

- The Tranche audits are conducted in two groups of three Topics, each at weekly intervals, with each group separated by a two week gap as shown at Figure 2. This intent may and did vary based on the availability of key persons and MSPO events;

- The Audit lead shall create a draft report for each topic audit. The draft is verified by the assisting auditors and fact checked by both CoA and ISP participants; and

- The completed individual reports shall be aggregated into a single Tranche report.

To encourage engagement, the first Tranche was linked to an existing AM milestone contractual payment by requiring each of the 6 Topics to achieve an average score of 3 or greater. The remaining Tranche audits were not subject to milestone payments. However, the conduct of Tranche 1 had successfully set the scene for the following Tranches, with improved understanding of AM processes and their benefits. Financial reward closely linked to a measurable and achievable outcome achieved focus on the task at hand and indicated the value assigned to AM maturity by the asset owners/operators and the responsible MSPO Governance function.
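The milestone condition can be expressed as a simple check. This is an illustrative sketch only: the function names, the threshold parameter and the sample Topic averages are hypothetical and are not results from the audit. The Topic names are borrowed from the example Tranche Topic set at Table 2.

```python
def milestone_met(topic_averages: dict[str, float], threshold: float = 3.0) -> bool:
    """True only if every Topic in the Tranche averages at or above the threshold."""
    return all(avg >= threshold for avg in topic_averages.values())


def topics_below(topic_averages: dict[str, float], threshold: float = 3.0) -> list[str]:
    """List the Topics that fall short, to focus follow-up effort."""
    return [topic for topic, avg in topic_averages.items() if avg < threshold]


# Hypothetical averages for illustration only (assumed values).
tranche = {"Context Setting": 3.7, "Decision Making": 2.7, "Resourcing": 3.0}
print(milestone_met(tranche))  # False
print(topics_below(tranche))   # ['Decision Making']
```

Checking each Topic separately, rather than the Tranche-wide mean, reflects the stated requirement that each of the 6 Topics reach the score of 3.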

The program described at Figure 2 was completed in the period allocated, with the first audit interview (Context Setting) conducted on 26 June 2020, the last audit interview conducted on 14 April and the Final Draft Report submitted on 3 June 2021.

Figure 2: 2020/21 Asset Management Maturity Audit

7 MENTORING AUDIT OUTCOMES #

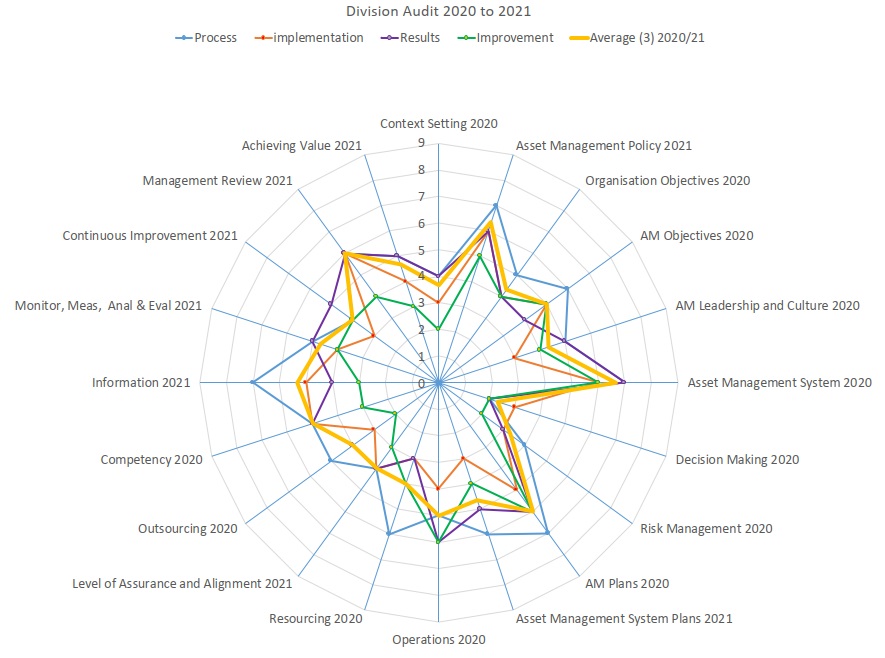

The scores achieved for each Topic and each criterion during the three audits are shown diagrammatically in the radar plot at Figure 3. The average score applies to only the first three Criteria to allow a valid comparison between the 2019 Initial Audit and the 2020/2021 full audit. The reduction in scores as criteria transition from Process defined to Implemented to Measured to Improved is readily evident. Also evident are the modest scores of arguably two of the most important Topics after setting Objectives, being Decision Making and Risk Management. Without robust connective tissue between Organisational Objectives and Asset Management Plans, an effective asset management capability will never be achieved.

Figure 3: Topic Scores for each Criteria and the Topic Average.

The initial 2019 audit identified 84 opportunities. The Mentoring Audit produced another 226 opportunities across three Tranches. Many of these opportunities were duplicates affecting multiple Topics. One specific example was the large number of quality management issues affecting multiple Topics, as identified in Tranche 1. A summary of the opportunities by Tranche is provided at Table 4, noting that the numbers shown include some duplicates. This table is slightly misleading, as Tranche 1 created a set of noted opportunities (100+) that would have overwhelmed the system, both in numbers and in our ability to aggregate them into a portfolio of projects, if repeated in Tranches 2 and 3. Some self-regulation during the subsequent Tranche audits kept the opportunities in Tranches 2 and 3 to manageable bounds.

| Initial Maturity Audit (Issues and Opport) | 84 |

| Tranche 1 Opportunities | 101 |

| Tranche 2 Opportunities | 73 |

| Tranche 3 Opportunities | 52 |

| Tranche Total | 226 |

| Grand Total | 310 |

Table 4: Identified Opportunities for Improvement by Tranche

Based on the Topic scores and the overall improvements achieved by each Tranche listed at Table 5, the following interpretations for the differences are advanced:

- The complete set of 101 questions focusses more on the asset management system and its process maturity.

- Improvement actions grew organically in MSPO with no formal project plan or overarching resource allocation to resolve the identified opportunities from the initial program (a form of gap identification).

- The MSPO rate of change from the initial 2019 scores to the results achieved in Tranches 1 to 3 shows the rate accelerating after Tranche 1 then decelerating after Tranche 2, as shown in the following Table 5. This reflects the increased time available since the initial audit for Tranche 2 improvements, and the difficulty of achieving improvement as the audit scores increase from 0 to 8.

| Tranche 1 – % Improvement from Initial Audit | 45.8% |

| Tranche 2 – % Improvement from Initial Audit | 78.4% |

| Tranche 3 – % Improvement from Initial Audit | 87.0% |

Table 5: Percentage Improvement of Topic Scores from Initial Audit
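The acceleration and deceleration can be checked with a short calculation. This sketch combines the Table 5 percentages with the approximate audit timings reported in this section (12, 18 and 22 months after the initial audit). The function name is hypothetical, and treating the initial audit as 0% at month 0 is an assumption.

```python
# (months after the initial audit, cumulative % improvement from the initial audit).
# The month-0 baseline of 0% is an assumption; timings are approximate.
checkpoints = [(0, 0.0), (12, 45.8), (18, 78.4), (22, 87.0)]


def monthly_rates(points):
    """Percentage points of improvement gained per month in each interval."""
    rates = []
    for (m0, p0), (m1, p1) in zip(points, points[1:]):
        rates.append(round((p1 - p0) / (m1 - m0), 2))
    return rates


rates = monthly_rates(checkpoints)
print(rates)
```

On these figures the rate is about 3.8 percentage points per month up to Tranche 1, about 5.4 between Tranches 1 and 2, and about 2.15 between Tranches 2 and 3, which is consistent with the pattern described above.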

The Tranche 1 audit was 12 months after the 2019 audit, with Tranche 2 being 18 months after and Tranche 3 some 22 months after the initial audit. As Table 5 demonstrates, this essentially organic growth, without the assistance of outside resources, was changing the business. As time progressed “getting better got better”. A deeper understanding of what Asset Management truly meant was percolating across the MSPO, not from high cost workshops, training or targeted programs of change, but from leadership:

- Taking accountability for audit delivery;

- Involvement with staff in developing pre-audit response documents; and

- Fact checking the reports and lists of opportunities identified.

Doing the audit, and the hard work of management commitment to improvement, creates change. That work included a large number of personnel engaging with the MSPO Asset Management SME while completing the pre-audit questionnaires and reviewing prior opportunities; it wasn’t just about being told to ‘do better’. It’s the journey that matters, not just the end score.

8 COMPARATIVE CASE STUDY #

Audits must deliver value for money beyond just the comfort given. While this value may be difficult to measure directly, a comparative assessment of results achieved by another like sized organisation over a similar time period from a similar start point can provide some insight into the advances made during the entire “end to end” process. Fortunately, just such a case study was published recently by the Asset Management Council. In 2018, Southern Rural Water (SRW) commenced a two year maturity journey following an initial maturity assessment. The need to improve asset management maturity was driven by the requirements of the Victorian Government Asset Management Assurance Framework (AMAF) mandated by Victorian Treasury to enforce compliance with a number of financial Acts of Parliament.

The SRW audit process applied public domain question sets, such as those from the Institute of Asset Management or the Global Forum on Maintenance and Asset Management, which have much in common with the MSPO audit process. The asset base is less expensive at A$1.3 Billion, but the widespread nature of a network of irrigation pipes, ditches, weirs and SCADA for flow measurement, plus seven major dams requiring compliance to ANCOLD (Australian National Committee on Large Dams) safety and risk management guidelines, creates a complex mix of requirements.

In comparing the resource efforts of internal staff, the direct involvement in the maturity assessments was:

- Southern Rural Water (SRW) – 16 half day workshops;

- MSPO – 20 question and answer audit sessions of one to two hours each and 20 pre-audit templates completed by Team Leaders.

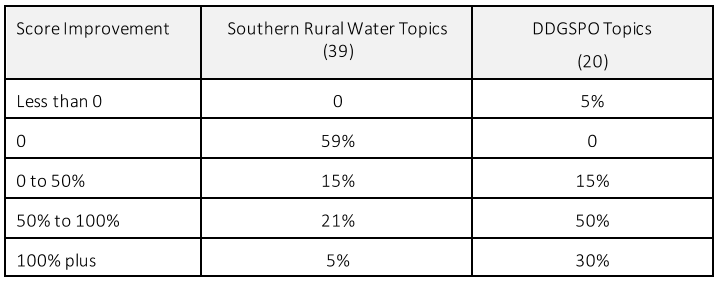

Figure 4 below shows a comparison of the two improvement outcomes on a similar radar plot where Green represents the starting point and Orange represents the end point. MSPO is on the Left Hand side and SRW on the Right Hand side.

Figure 4: Comparative Radar Plot of 2 Year AM Maturity Improvement Programs – MSPO and SRW

The 39 assessment points for Southern Rural Water reflect their origins in the Institute of Asset Management and the Global Forum on Maintenance and Asset Management (GFMAM) Asset Management Landscape including Subject Areas. Some of the 39 points are not specifically required in an Asset Management System by the ISO standard such as “Shutdowns and Outage Strategy” or “Reliability Engineering” or are subsets that may not be required by all industries. The Subject Areas are described in the GFMAM document The Asset Management Landscape Second Edition.

In comparing the two organisations’ outcomes, the following statistics on the two-year improvement effort at Table 6 are noted. In both MSPO and SRW, the ISO certification points are similar, with the scoring midpoint being 4.5 for MSPO and 2.5 for SRW. A key observation for SRW is that 59% of the assessment points had no change in score.

Table 6 Comparative Improvements in Maturity Score

Based on these scores and the overall improvements achieved by each Tranche shown at Table 6, the following interpretations for the differences are advanced:

- The MSPO set of 20 topics and associated questions better focusses on the asset management system and its process maturity.

- Improvement actions grew organically in MSPO with no formal project plan or resource allocation to resolve the 100 identified opportunities from the initial program (a form of gap identification).

- The MSPO rate of improvement from the Initial Audit scores to the results in Tranches 1 to 3 shows the rate of change decelerating after Tranche 2, as shown in Table 5. This reflects the increasing difficulty of improvement as the maturity scores increase.

9 OBSERVATIONS #

Five core observations were noted when summing up the results of the MSPO Asset Management maturity assessment program:

- Many advances in maturity were achieved over the two years. However, they are from a low base and likely unsustainable beyond current scores of 5 to 6 out of 9 using existing ad hoc approaches to change.

- As observed in the slowing of improvement as scores rise, and as advised in the initial audit, the marking is not linear in effort but exponential in nature. Moving beyond an average score of 4 to 5 will become increasingly difficult, and clear priorities and targets will become necessary to allocate resources appropriately.

- Step advances in performance can be best achieved by an in-house capability that is well led technically and culturally.

- The Mentoring Audit was a drawn out process with much effort and time spent preparing responses. It should only need to be done once to establish a baseline that is broadly compliant with ISO 55001. Notwithstanding, a defined measure of required AM maturity will be required in the future to assess status and inform continuous improvement efforts.

- The MSPO is close to a level that would achieve an Independent ISO 55001 certification for its scope. Certification to the ISO though is not considered good enough for the criticality of the operations supported nor the requirements of their stakeholders. The challenge that MSPO’s scope does not include the operations of the physical assets being supported would also need to be addressed.

This result would not have been achieved during the short burst of formal Certification. The large numbers of opportunities (200+) discovered, if identified in a formal audit, would have overwhelmed the participants and challenged growth. The mentoring approach created an audit that was the AM Journey itself not just a measure.

10 ACKNOWLEDGEMENTS #

The authors wish to thank the many people involved in this effort and its clear success. Without their efforts this progress would not have been achieved. Thanks specifically go to the Leadership Team that made it happen and especially the impact of CAPT Grant McLennan, Director and Mr Neil Comer, ISP Program Manager whose relationship building efforts drove the leadership and culture score up by 86% in the 12 months between the initial and mentoring audits.

11 ABBREVIATIONS #

AM Asset Management

AM Council Asset Management Council

DCPAS Defense Civilian Personnel Advisory Service (US)

GFMAM Global Forum on Maintenance and Asset Management

IAM Institute of Asset Management

ISO International Organization for Standardization

ISP Industry Service Provider

MA Mentoring Audit

MESA Maintenance Engineering Society of Australia (predecessor to AM Council)

MSPO Maritime System Program Office

RAN Royal Australian Navy

12 BIBLIOGRAPHY #

Internal Reports Capability Acquisition Sustainment Group, Maritime System Program Office, 2019 to 2021

AECOM Australia, Southern Rural Water’s Asset Management Journey, Asset Management Council, The Asset Journal Issue 02 Vol 15, June 2021

GFMAM, Asset Management Landscape Second Edition, ISBN 978-0-9871799-2-0, Mar 2014

DCPAS, DoD Mentoring Resource Portal, Stages of Mentoring, https://www.dcpas.osd.mil/Content/Documents/CTD/StagesofMentoring.pdf